To succeed with AI, you need good data, and the more the better. AI requires a lot of experimentation, and new innovations come from using new data sources and high volumes of structured and unstructured data. To innovate at scale in a large corporation, you need to try new implementations strategically and iterate quickly across multiple AI use cases. The basics need to be in place first: data has to be gathered, stored, organized, and cleaned efficiently. Most AI projects are delayed due to data-related issues.

AI is only as good, or as bad, as the data you use

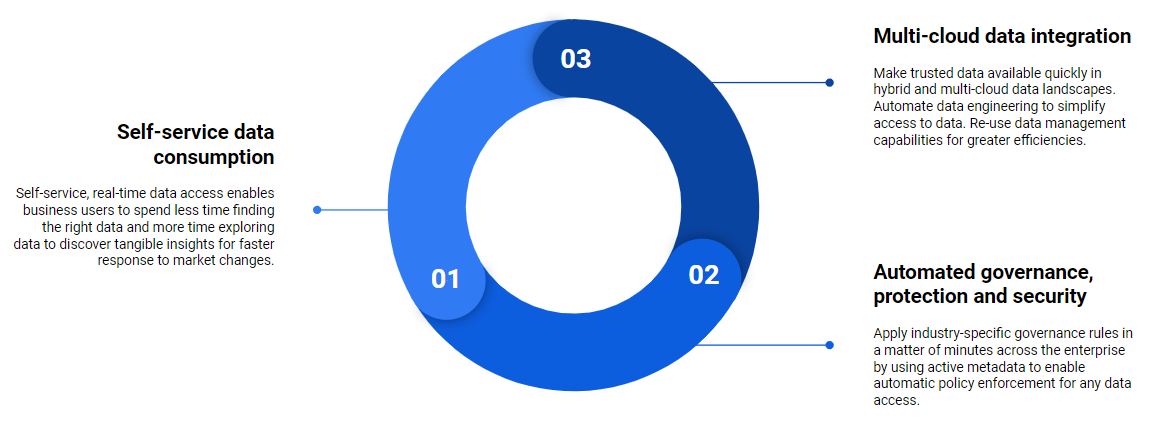

These are the three principles that I’ve seen leading companies use to scale AI experimentation by making data more accessible:

1. Self-service data consumption

Self-service, real-time data access lets business users spend less time finding the right data and more time exploring it, so they can discover tangible insights and respond faster to market changes.

2. Multi-cloud data integration

Companies today have their data distributed across multiple applications and cloud environments, so it is important to make trusted data available quickly across hybrid and multi-cloud landscapes. Automate data engineering to simplify access to data, and reuse data management capabilities for greater efficiency.

3. Automated governance, protection, and security

Apply industry-specific governance rules across the enterprise in a matter of minutes by using active metadata to automatically enforce policies on every data access.
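
To make the third principle more concrete, here is a minimal sketch of metadata-driven policy enforcement. It is not taken from any specific governance product: the `ColumnMetadata` class, the `POLICY` table, the `pii` tag, and the role names are all hypothetical, chosen only to illustrate the idea that tags attached to the data, rather than hand-written checks in each application, decide what a given user may see.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ColumnMetadata:
    """Hypothetical catalog entry: a column name plus governance tags."""
    name: str
    tags: frozenset = frozenset()

# Hypothetical policy table: tag -> roles allowed to read tagged columns.
POLICY = {"pii": {"compliance"}}

def can_read(role, tags):
    """A role may read a column if it is allowed for every governed tag on it."""
    return all(role in POLICY[tag] for tag in tags if tag in POLICY)

def enforce(row, catalog, role):
    """Return a copy of the row with columns the role may not read masked."""
    return {
        col: value if can_read(role, catalog[col].tags) else "***"
        for col, value in row.items()
    }

# Illustrative data only.
catalog = {
    "email": ColumnMetadata("email", frozenset({"pii"})),
    "country": ColumnMetadata("country"),
}
row = {"email": "a@example.com", "country": "FI"}

analyst_view = enforce(row, catalog, "analyst")
compliance_view = enforce(row, catalog, "compliance")
```

Because the rule lives in the metadata, tagging a new column as `pii` in the catalog is enough for the same masking to apply everywhere `enforce` is called, which is the sense in which a policy can be rolled out quickly across many data sources.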